Artificial Neural Networks

Natural neural networks

This is the drawing of a natural neural network.

Artificial neural networks are based on natural neural networks.

- Natural neural networks use “spikes” of energy to transmit information from one neuron to another.

- Natural neural networks also use chemical reactions to transmit information from one neuron to another.

Artificial neural networks

- Artificial neural networks (the classical ones) use constant values in all the interconnections.

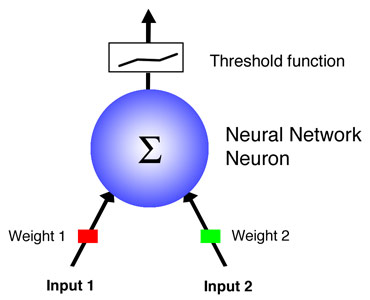

- Each artificial neuron has “n” inputs and 1 output.

- Depending on the type of neuron selected, you can use real values as inputs and get a real value as output.

- The neuron calculates its output by adding together all the input values, each of them weighted by an “appropriate” factor, and then applying a special threshold function.

O = f(∑(w⋅X))

Where “O” is the output of the neuron, “f” is the threshold function, “w” is the weight of each input and “X” is each input; the sum runs over all “n” inputs. This neuron is called a perceptron.
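The formula above can be sketched in a few lines of Python. This is only an illustration: the step function used as “f”, its threshold of 0, and the example weights and inputs are assumptions, not values taken from this page.

```python
def step(s, threshold=0.0):
    """Threshold function f (assumed): 1 if the weighted sum reaches the threshold, else 0."""
    return 1.0 if s >= threshold else 0.0


def perceptron_output(weights, inputs, f=step):
    """Compute O = f(sum(w * X)) for a single neuron."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return f(weighted_sum)


# Hypothetical weights and inputs, chosen only for illustration.
weights = [0.5, -1.0, 2.0]
inputs = [1.0, 0.5, 0.25]

# Weighted sum: 0.5*1.0 + (-1.0)*0.5 + 2.0*0.25 = 0.5, which reaches the threshold.
output = perceptron_output(weights, inputs)
```

Passing a different function as `f` changes the kind of neuron without touching the weighted sum.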

- To get a useful neural network you need more than one perceptron. For example:

It is common to connect all the outputs of the first level of neurons to all the inputs of the next level, and so on.
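A minimal sketch of this level-to-level wiring, assuming a sigmoid as the threshold function (the page does not name one) and made-up weights. Each level is a list of neurons and each neuron is a list of weights, one per input coming from the previous level.

```python
import math


def sigmoid(s):
    """A smooth threshold function (an assumption; the text does not specify f)."""
    return 1.0 / (1.0 + math.exp(-s))


def layer_output(layer_weights, inputs, f=sigmoid):
    """Every neuron of the level receives all the inputs and produces one output."""
    return [f(sum(w * x for w, x in zip(neuron_w, inputs))) for neuron_w in layer_weights]


def network_output(layers, inputs):
    """Feed the outputs of each level into every neuron of the next level."""
    values = inputs
    for layer_weights in layers:
        values = layer_output(layer_weights, values)
    return values


# Hypothetical network: 2 inputs, a first level of 3 neurons, then 1 output neuron.
layers = [
    [[0.1, 0.4], [-0.3, 0.2], [0.5, -0.6]],  # 3 neurons, 2 weights each
    [[0.7, -0.2, 0.3]],                      # 1 neuron, 3 weights
]
result = network_output(layers, [1.0, 0.0])  # a list with one value between 0 and 1
```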

Learning

- If you want to change the behavior of the neuron you just need to change the “w” weights and/or the threshold function.

- Normally the threshold function is selected a priori and the weights are “learned” dynamically.

- To teach a perceptron you need to choose the “best” weights. Best means the values that give the perceptron enough accuracy and at the same time a good capability for generalization.

- You can do this if you have a set of examples, each with its inputs and the corresponding output.

- Then you can change each weight “w” by “w=w+Δw” where “Δw=η(O-o)x”. In this rule “O” is the expected output, “o” is the output the perceptron currently produces, “x” is the input that the weight multiplies, and η is the learning rate constant. It is necessary to apply this fine-tuning to “w” several times until the error is acceptable.
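The update rule can be sketched as a small training loop. Everything specific here is an assumption made for illustration: the step threshold function, the learning rate of 0.25, the stopping condition (no wrong outputs in a full pass), and the logical AND examples. The first input of every example is fixed at 1.0 so that its weight can shift the threshold.

```python
def step(s):
    """Assumed threshold function: 1 if the weighted sum is at least 0, else 0."""
    return 1.0 if s >= 0.0 else 0.0


def predict(weights, x):
    """Current output "o" of the perceptron for the inputs x."""
    return step(sum(w * xi for w, xi in zip(weights, x)))


def train_perceptron(examples, eta=0.25, max_epochs=100):
    """Apply w = w + eta * (expected - current) * x until no example is wrong."""
    weights = [0.0] * len(examples[0][0])
    for _ in range(max_epochs):
        errors = 0
        for x, expected in examples:
            current = predict(weights, x)
            if current != expected:
                errors += 1
            # When the output is already correct, (expected - current) is 0
            # and the weights stay the same.
            weights = [w + eta * (expected - current) * xi for w, xi in zip(weights, x)]
        if errors == 0:  # the error is acceptable: stop fine-tuning
            break
    return weights


# Hypothetical training set: logical AND, with a constant first input of 1.0.
examples = [
    ([1.0, 0.0, 0.0], 0.0),
    ([1.0, 0.0, 1.0], 0.0),
    ([1.0, 1.0, 0.0], 0.0),
    ([1.0, 1.0, 1.0], 1.0),
]
weights = train_perceptron(examples)
```

A single perceptron trained this way can only settle on examples that a weighted sum plus a threshold can separate, which is one reason to combine several neurons.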

The same idea then has to be applied when you have more than one neuron. For this there is an algorithm called backpropagation.

- Backpropagation, in short, applies (more or less) the same method described above to each neuron in the whole network. It starts at the output level of neurons, where the error can be measured directly; from there the algorithm can work out the error to assign to the next inner level, and so on, until the input level of neurons has been trained. The algorithm repeats this procedure several times until the accuracy is acceptable.

- Training more than necessary can hurt the network's ability to generalize, so the network will be less prepared for unexpected situations.
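As a rough sketch of backpropagation under stated assumptions: the threshold function is a sigmoid, each neuron gets an extra constant input of 1.0 (a bias, not mentioned above), weights are updated after every example, and the starting weights, learning rate and XOR examples are made up. With so few neurons and fixed starting weights, training may or may not reach a small error.

```python
import math


def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))


def forward(network, x):
    """Return the values at every level, starting with the inputs themselves."""
    activations = [list(x)]
    for level in network:
        inputs = activations[-1] + [1.0]  # constant 1.0 input acts as a bias (assumption)
        activations.append(
            [sigmoid(sum(w * xi for w, xi in zip(neuron, inputs))) for neuron in level]
        )
    return activations


def train_step(network, x, expected, eta=0.5):
    """One backpropagation update for a single example (changes network in place)."""
    activations = forward(network, x)
    # Error signal at the output level: (expected - current) * sigmoid derivative.
    deltas = [(t - o) * o * (1.0 - o) for t, o in zip(expected, activations[-1])]
    for k in range(len(network) - 1, -1, -1):
        level = network[k]
        inputs = activations[k] + [1.0]
        if k > 0:
            # Error signal for the next inner level, computed before the
            # weights of this level are changed.
            inner = [
                sum(d * neuron[j] for d, neuron in zip(deltas, level))
                * activations[k][j] * (1.0 - activations[k][j])
                for j in range(len(activations[k]))
            ]
        # Same rule as for one perceptron: w = w + eta * delta * x.
        for d, neuron in zip(deltas, level):
            for j, xi in enumerate(inputs):
                neuron[j] += eta * d * xi
        if k > 0:
            deltas = inner


def total_error(network, examples):
    """Sum of squared errors over all examples and outputs."""
    return sum(
        0.5 * (t - o) ** 2
        for x, expected in examples
        for t, o in zip(expected, forward(network, x)[-1])
    )


def make_network():
    """Hypothetical starting weights: 2 inputs, 2 inner neurons, 1 output neuron."""
    return [
        [[0.5, -0.4, 0.1], [-0.3, 0.6, -0.2]],  # last weight of each neuron is the bias
        [[0.3, -0.5, 0.2]],
    ]


xor_examples = [
    ([0.0, 0.0], [0.0]),
    ([0.0, 1.0], [1.0]),
    ([1.0, 0.0], [1.0]),
    ([1.0, 1.0], [0.0]),
]
network = make_network()
error_before = total_error(network, xor_examples)
for _ in range(2000):  # repeat the procedure several times
    for x, expected in xor_examples:
        train_step(network, x, expected)
error_after = total_error(network, xor_examples)
```

Comparing `error_before` with `error_after` shows whether the repeated passes helped on the training examples; it says nothing about how the network behaves on inputs it has not seen.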

fastdev/neural_networks.txt · Last modified by memeruiz